The State of AI 2025 (Through a Business Lens)

What actually happened this year, and what it means for your business

If you're following the feeds on social media, you'd believe AI has gone CRAZY and we'll all be jobless soon. The reality, though, is that real progress and viral clips on social media don't overlap all that much.

That's why, starting this year, I'm doing an annual State of AI writeup to help you cut through the noise. This isn't about predicting what's next. It's about recapping what happened, what works, and where things stand today.

I'm organizing this around the 5 AI Modes – the capabilities that matter when you're trying to get work done.

Let's dive in!

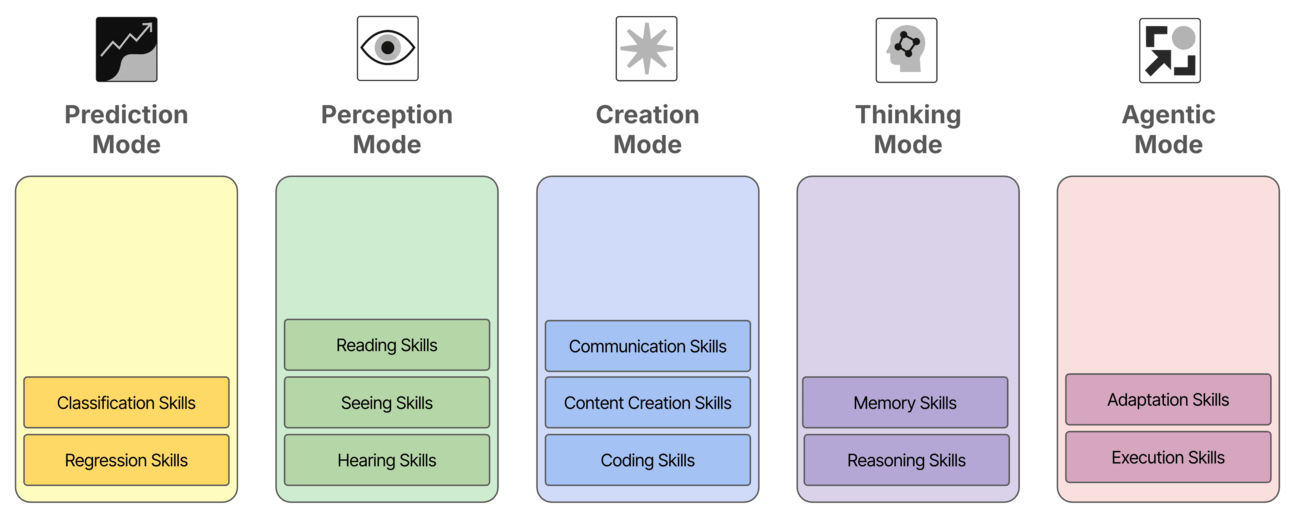

5 AI Modes – The Lens I Use To Look At Things

Before we look at what happened in 2025, here's a quick refresher on the framework I use to help non-AI people make sense of AI capabilities:

1. Prediction: Classify, forecast, anticipate what's next

2. Perception: See, hear, and read the world

3. Creation: Generate new things (text, images, code)

4. Thinking: Reason step-by-step, remember context

5. Agentic: Take action autonomously

(If you want the full breakdown of each mode with examples and tools, check out my deep dive on the 5 AI Modes.)

The State of AI (By Mode)

Now let's see what actually happened in 2025, mode by mode.

Prediction Mode

This is what most people associate with AI: predicting stuff, making forecasts, learning from data. The classic machine learning applications: churn prediction, fraud detection, sales forecasting, anomaly detection, you name it.

The thing is: AI technology in this mode hasn't really made a major leap in the last 10 years.

The last big invention here was XGBoost, which came out in 2014. It builds on a family of algorithms called gradient-boosted trees, which make pretty accurate predictions on a large variety of (tabular) datasets. Turns out, we still use the same algorithms for forecasting and classification that we used back then.
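To make "boosted trees" a bit more concrete, here is a minimal sketch of the core idea in plain Python: start with the average, then keep adding tiny one-split trees ("stumps"), each fitted to the errors the previous ones left behind. This is a toy illustration on made-up sales numbers, not XGBoost itself, which adds regularization and a lot of engineering on top. All function names, the data, and the settings (`n_rounds`, `learning_rate`) are my own assumptions for illustration.

```python
from statistics import mean


def fit_stump(xs, residuals):
    """Find the single threshold on x that best splits the residuals (least squared error)."""
    best = None
    # Skip the largest x as a threshold so the right-hand side is never empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_val, right_val = mean(left), mean(right)
        error = sum((r - left_val) ** 2 for r in left) + sum((r - right_val) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_val, right_val)
    return best[1:]  # (threshold, left_val, right_val)


def predict_stump(stump, x):
    threshold, left_val, right_val = stump
    return left_val if x <= threshold else right_val


def fit_boosted_stumps(xs, ys, n_rounds=50, learning_rate=0.3):
    """Gradient boosting for squared error: each stump is fitted to the current residuals."""
    base = mean(ys)
    stumps = []
    preds = [base] * len(xs)
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
    return base, stumps, learning_rate


def predict(model, x):
    base, stumps, learning_rate = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


# Made-up example: month number vs. units sold, with a jump after month 4.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [10, 12, 11, 13, 30, 32, 31, 33]
model = fit_boosted_stumps(xs, ys)
print(round(predict(model, 2), 1), round(predict(model, 7), 1))
```

Note what the sketch needs to work: clean, numeric, structured rows. That is the whole game in this mode.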

I hear this very often: "We have GPT-5 now, surely we can forecast sales better?" No. The tech behind forecasting is completely separate from large language models. It's still traditional machine learning—regression, classification, time series analysis. Same old stuff.

What makes or breaks prediction AI? Data quality. If you don't have clean, structured data, you won't get a good model. Period. No amount of ChatGPT hype changes that equation.

These use cases are still relevant for business. But they're not part of the generative AI revolution everyone's talking about.

Perception Mode

This is where AI learns to see, hear, and read the world. Think document analysis, image recognition, audio transcription—anything where you're feeding unstructured data into a system and expecting it to make sense of it.

Language Understanding (Reading)

Here we actually saw real progress. GPT-4o from last year scored 44.8 on language understanding benchmarks. Today's best models like Claude Sonnet 4.5 hit 76.2, a jump of roughly 70% in 12 months! With reasoning, these models score even higher, but we'll cover reasoning in a bit.

What does this mean practically? AI is now much better at extracting information from text and getting the nuances. Here’s an example of a text that an LLM in 2025 can process better than in 2024:

Overall, Claude leads the pack here for non-reasoning models. If you need fast text understanding, that’s the place to go.

Visual Understanding (Seeing)

Plot twist: the big breakthrough happened last year, not this year.

Source: Hugging Face VLM leaderboard

When GPT-4o went multimodal in 2024, it jumped from basically nothing to a benchmark average of 72.8 across various vision tasks. Big breakthrough. This year, we're at 79.6. That's progress, but not exactly another revolutionary leap. Google leads in visual AI, likely helped by its huge pool of YouTube training data.

For business, that means 2024 mostly unlocked what you need. Multimodal document processing – where AI looks at the actual image of a document rather than just OCR text – is already significantly better than most text-only approaches.

Audio Understanding (Hearing)

Let's focus on speech-to-text here as the major audio understanding task. All the major players are bunched together at 80-90% accuracy. That means anywhere from every 10th to every 5th word might be wrong, which is fine for meeting notes but might be problematic for legal or medical transcription. You'll still need to come up with a custom benchmark and model assessment for these use cases.
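If you want a starting point for such a custom benchmark, the standard metric is word error rate (WER): the number of word substitutions, insertions, and deletions needed to turn the transcript into the reference, divided by the reference length. Here is a minimal sketch in plain Python. I'm assuming "accuracy" in the numbers above is roughly 1 minus WER, and the example sentences are made up.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between hypothesis and reference, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # Dynamic programming over words; prev[j] is the distance to the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # word missing from the transcript (deletion)
                curr[j - 1] + 1,     # extra word in the transcript (insertion)
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Made-up example: one wrong word out of ten.
reference = "the patient takes ten milligrams of drug twice a day"
transcript = "the patient takes two milligrams of drug twice a day"
wer = word_error_rate(reference, transcript)
print(f"WER: {wer:.0%}")
```

The example shows why an average score can mislead: 90% of the words are right, but the one wrong word is the dosage. For legal or medical use, you'd want to measure errors on the terms that matter, using your own recordings.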

Source: Artificial Analysis

A main differentiator is price. Whisper via Groq costs $0.67 per 1,000 minutes, while AWS Transcribe charges $24 per 1,000 minutes. If you're doing high-volume transcription, that's a roughly 36x difference in cost for very similar quality. Meeting transcripts galore!
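To see what that gap means on an actual bill, here is a quick back-of-the-envelope calculation. The two prices are the ones quoted above; the monthly volume of 50,000 minutes is a made-up assumption, so plug in your own number.

```python
# Prices per 1,000 minutes of audio, as quoted above (USD).
GROQ_WHISPER_PER_1000_MIN = 0.67
AWS_TRANSCRIBE_PER_1000_MIN = 24.00


def transcription_cost(minutes: float, price_per_1000_min: float) -> float:
    """Cost in USD for transcribing the given number of audio minutes."""
    return minutes / 1000 * price_per_1000_min


monthly_minutes = 50_000  # hypothetical volume, roughly 830 hours of audio

groq_cost = transcription_cost(monthly_minutes, GROQ_WHISPER_PER_1000_MIN)
aws_cost = transcription_cost(monthly_minutes, AWS_TRANSCRIBE_PER_1000_MIN)
ratio = AWS_TRANSCRIBE_PER_1000_MIN / GROQ_WHISPER_PER_1000_MIN

print(f"Groq Whisper:   ${groq_cost:,.2f} per month")
print(f"AWS Transcribe: ${aws_cost:,.2f} per month")
print(f"Price ratio:    {ratio:.1f}x")
```

List prices change often and volume discounts exist, so treat this as a template for your own comparison rather than a current quote.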

Creation Mode

Now we’re getting to where things get wild. Creation mode is the AI that everyone's been playing with: generating text, images, video, and code. But the real story isn't just that outputs got better – it's how they got better.

The Multimodal Breakthrough

A year ago, image generation was a two-step process. You'd describe what you wanted in text, DALL-E would generate an image, but the AI couldn't "remember" what the image actually looked like.

Ask it to put your book cover on a beach? It would generate a different book cover based on a text description.

ChatGPT in 2024

Today’s native multimodal models can see and generate in one pass. I can upload my book cover image, ask for it on a mountain, and the AI maintains perfect visual consistency. The model ingests the actual image, not a description of it, and outputs an exact visual match in a new context.

I’ve described some business use cases for this in this post on AI Image generation.

Now the same breakthrough has happened with video. Google kicked it off with Veo 3, and then came Sora 2 (released this week), which doesn't just generate video: it generates video with synchronized audio. One model, end-to-end. I’ll spare you the videos here, you’ve probably seen them. If not, google “Sam Altman Skibidi Toilet”, but don’t tell me I didn’t warn you.

I’m pretty sure: in less than two years, you won't be able to distinguish AI from reality. If your business relies on photo/video as evidence (insurance claims, anyone?), start planning now.

Text & Code: The Real Winners

While everyone obsessed over image generation, code capabilities doubled. Benchmark scores went from 20-25 to 50+ in 12 months.

Source: Artificial Analysis

That’s a huge deal. We’re now seeing AI doing bug fixes, pull request automation, full feature implementations from issue descriptions – with minimal human intervention.

To be clear: if you or your devs are still coding without AI assistance, you're at a serious disadvantage!

Text generation also got an unexpected upgrade: LLMs are now really good at improving their own prompts. Tell GPT-5 "improve this prompt using the RGTD framework" and you'll get a prompt that gives you dramatically better outputs. The models have learned meta-skills.

Oh, and here's something wild: LLMs got casually more persuasive than humans. Studies show they can change people's minds more effectively than human conversation, even with conspiracy theorists. I’m leaving you this great post by Ethan Mollick here if you'd like to dive deeper.

In 2025, we also learned that in blind tests, AI-generated content typically outperforms human writing. BUT: When people know it's AI, engagement drops. Make of that what you will.

Thinking Mode

This is where AI moves from "do tasks" to "understand problems". Two big capabilities here: memory (how much context can the model hold?) and reasoning (can it think through complex problems step-by-step?).

Memory: Bigger Context, Diminishing Returns

Last year, context windows were 30K-100K tokens. Today, 128K has been established as the bare minimum. (That's enough to fit an entire Harry Potter book in a single prompt.)

The most context-capable models are Grok, which claims 2 million tokens, and Gemini and Claude, which both offer up to 1 million. GPT-5 supports "only" 400K, though GPT-4.1 goes up to 1M if you want to stay in the OpenAI world.

Source: Artificial Analysis

However, not all context is equal. Performance often degrades hard after ~100K tokens. I've found the sweet spot is still under 50K tokens – models catch every nuance here and work most accurately. Load 2 million tokens and you're waiting minutes for responses that might hallucinate or forget key details anyway.

RAG: Still Relevant

Even with massive context windows, Retrieval-Augmented Generation (RAG) remains the best way to work with large knowledge bases. But the technology has evolved. Naive RAG (chunking + simple semantic search) works fine for straightforward documents. If you have complex, hierarchical, or multi-format document collections, though, you're quickly in "advanced RAG" territory.

That means custom retrieval logic, re-ranking, and validation loops. This isn't plug-and-play. It's custom dev work.
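For reference, here is roughly what the "naive" end of that spectrum looks like: split documents into chunks, score each chunk against the question, and paste the best ones into the prompt. This is a minimal sketch in plain Python under a big simplifying assumption: I use word-count overlap (cosine similarity on bag-of-words) as a stand-in for the embedding model a real system would use. The documents, function names, and prompt wording are all made up.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Split a document into fixed-size chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def vectorize(text):
    """Bag-of-words vector; a real system would call an embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, top_k=2):
    """Return the top_k chunks most similar to the query."""
    query_vec = vectorize(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, vectorize(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(query, chunks, top_k=2):
    """Assemble the prompt that would be sent to the LLM."""
    context = "\n---\n".join(retrieve(query, chunks, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


documents = [
    "Refund policy: purchases can be returned within 30 days with a valid receipt.",
    "Support hours: our team is available Monday to Friday from 9am to 5pm.",
    "Shipping: orders to EU countries arrive in three to five business days.",
]
chunks = [chunk for doc in documents for chunk in chunk_text(doc)]
print(build_prompt("What is the refund policy?", chunks, top_k=1))
```

Everything that makes RAG hard in practice sits outside this sketch: chunk boundaries that respect document structure, re-ranking the candidates, and checking that the answer is actually supported by the retrieved text.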

Source: https://arxiv.org/abs/2312.10997

If you'd like to dive deeper, check out the “What we’ve learned after 2 years with RAG” post with Towards AI CTO Louis-François Bouchard.

Reasoning: Sometimes Useful

The release of "reasoning" models like o1 was a big deal at the end of last year. But this "thinking" process isn't magic. It's essentially just throwing more compute power at generating answers to complex problems. Instead of immediately generating a response, these models work through the problem step-by-step, which sometimes leads to better results.

If you compare reasoning models to their non-reasoning counterparts, you'll see performance improvements of about 20%, but the exact gain heavily depends on the task. Reasoning models excel on tasks with clear right/wrong answers: code that needs to pass tests, math problems, logic puzzles. On other tasks like creative writing, reasoning models can perform even worse. There's no "correct" answer for a marketing email or blog post, so extended thinking just wastes time (and literally overthinks the problem).

Source: Artificial Analysis

Agentic Mode

Now we get to the hype machine. Google, Microsoft, OpenAI—everyone's selling us agents, the autonomous AI workers.

What's an Agent, Really?

Strip away the marketing and here's a useful definition:

An AI agent is an LLM calling tools in a loop to achieve a goal.

You don't tell it how to reach the goal. You just give it the goal, some tools (APIs, databases, code execution environments), and let it figure out the path. If you’re interested, you can read a deep dive on Understanding AI Agents here with me and AI guru Tristan Behrens.
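Here is what "an LLM calling tools in a loop" looks like when you strip it down to the skeleton. This is a minimal sketch, not a production pattern: `fake_llm` is a hard-coded stand-in for a real model call, and the two tools and the order data are made up. The part worth looking at is `run_agent`: ask the model for the next step, run the tool, feed the result back, repeat until it says it's done or a step limit kicks in.

```python
def lookup_order(order_id):
    """Hypothetical tool: look up an order status (made-up data)."""
    orders = {"A100": "shipped", "A200": "processing"}
    return orders.get(order_id, "unknown")


def send_email(message):
    """Hypothetical tool: pretend to send an email."""
    return f"email sent: {message}"


TOOLS = {"lookup_order": lookup_order, "send_email": send_email}


def fake_llm(goal, history):
    """Stand-in for a real model call: picks the next step based on what happened so far."""
    if not history:
        return {"tool": "lookup_order", "args": {"order_id": "A100"}}
    if len(history) == 1:
        status = history[0]["result"]
        return {"tool": "send_email", "args": {"message": f"Your order is {status}"}}
    return {"final": "Customer informed about order status."}


def run_agent(goal, llm, tools, max_steps=5):
    """The agent loop: the model decides, a tool runs, the result goes back to the model."""
    history = []
    for _ in range(max_steps):
        decision = llm(goal, history)
        if "final" in decision:
            return decision["final"], history
        tool = tools[decision["tool"]]  # only tools we explicitly handed over are callable
        result = tool(**decision["args"])
        history.append({"tool": decision["tool"], "args": decision["args"], "result": result})
    raise RuntimeError("agent did not finish within max_steps")


answer, steps = run_agent("Tell the customer where order A100 is", fake_llm, TOOLS)
print(answer)
for step in steps:
    print(step["tool"], "->", step["result"])
```

Even in this toy version you can see two guardrails: the agent can only call tools from the dictionary you give it, and `max_steps` stops it from looping forever.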

Reality Check

"Real" autonomous AI agents in production are maturing, but they're still hard to get right at scale. The demos always look amazing. But you'll quickly find yourself in the well-known rabbit hole of deep IT integration and data handling—where things get really messy really fast.

I've found that today's agents work incredibly well if you give them narrow goals with limited tools. Tasks like "Ensure incoming data matches this format using these 3-4 validation tools" can work. "Double our revenue with access to all company systems" will not.

The narrower the goal and the fewer the tools, the better your odds. The moment you expand scope, reliability craters.

Still, don't sleep on agents. They might not be the best place to start, but they're a good goal to have in mind on your integration-automation AI roadmap.

"Agentic capabilities" (which often is just how well the model can keep track of tasks and call external APIs) have really surged on the benchmarks. Gemini 2.0 Flash opened the stage earlier this year. Gemini 2.5 Pro, released a few months later, almost tripled agentic performance. GPT-5 and Grok slightly lead the pack for now.

The companies getting agent-like behavior to work are doing it with heavy guardrails, human-in-the-loop checkpoints, and extremely constrained problem spaces.

The Online Learning Myth

While we're debunking hype: Even in 2025, no model automatically improves from new data in real-time.

If a vendor tells you their AI "learns continuously" or "gets better as you use it," they're either lying or don't understand their own product. All improvements happen in batch processing – data gets collected, then models get retrained offline. YouTube or TikTok might do online learning at scale for recommendations, but your average business AI? Not happening.

Agents are the future everyone's betting on. But that future isn't entirely here yet. For now, treat "agentic AI" as a useful framing for the goal-state of automation workflows—not as autonomous digital employees you can hire right away.

What's Coming Next

We're already 2,000 words in, so let me keep this short. No one really knows what's next (probably not even the big AI labs themselves).

But here's what we do know: The competition is shifting from capabilities to price/performance.

Last year, it was "who has the smartest model?" This year, we’re seeing "who can deliver similar intelligence for less money?"

GPT-5 launched at $2/1M tokens – cheaper than GPT-4o and WAY cheaper than o3-pro. Google casually dropped Gemini 2.5 Pro as the cheapest frontier model. I'm not sure where this race ends, but except for maybe Google, all these labs need to make money eventually. Especially OpenAI and Anthropic. While OpenAI seems to have unlimited VC cashflow (don't ask me how), Anthropic is in a more precarious position. I wouldn't be surprised if we see M&A consolidation by this time next year.

What this means for you:

Stop chasing the latest model. Performance differences are shrinking. Focus on what works for your use case.

Build your own benchmarks. General benchmarks matter less than performance on YOUR data. Start tracking now.

Price matters. A 7x cost difference for marginal gains isn't worth it. Shop around.

Open source is catching up. Smaller, specialized models often work just as well—for way less.
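On the "build your own benchmarks" point, the simplest version is just a list of your own examples with known answers, plus a loop that scores each candidate model on them. Here is a minimal sketch. The two "models" are keyword rules standing in for real API calls, and the examples and per-call prices (a 7x gap) are invented for illustration.

```python
def evaluate(model, examples):
    """Share of examples where the model's label matches the expected label."""
    hits = sum(1 for text, expected in examples if model(text) == expected)
    return hits / len(examples)


def cost_per_correct(accuracy, price_per_call):
    """Average spend needed to get one correct answer."""
    return price_per_call / accuracy if accuracy else float("inf")


# Your own data: support tickets with the label you expect (made up here).
examples = [
    ("I want my money back", "refund"),
    ("Where is my package?", "shipping"),
    ("Please refund my order", "refund"),
    ("My delivery is late", "shipping"),
    ("Can I return this and get a refund?", "refund"),
]


def cheap_model(text):
    """Stand-in for a small, cheap model."""
    return "refund" if "refund" in text.lower() else "shipping"


def premium_model(text):
    """Stand-in for a larger, pricier model."""
    keywords = ("refund", "money back", "return")
    return "refund" if any(k in text.lower() for k in keywords) else "shipping"


# Hypothetical prices per call in USD.
candidates = {"cheap": (cheap_model, 0.001), "premium": (premium_model, 0.007)}

results = {}
for name, (model, price) in candidates.items():
    accuracy = evaluate(model, examples)
    results[name] = {"accuracy": accuracy, "cost_per_correct": cost_per_correct(accuracy, price)}
    print(f"{name}: accuracy {accuracy:.0%}, ${results[name]['cost_per_correct']:.5f} per correct answer")
```

Whether the extra accuracy is worth the higher price depends on what a wrong answer costs you, and that is exactly the kind of question a general leaderboard can't answer.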

The era of "just use GPT-whatever and pay whatever" might be ending. I expect 2026 will be even more about optimization, and even less about experimentation.

Conclusion

That's my State of AI in 2025.

Looking across all 5 modes, it’s clear that we're past the 'does AI work?' phase and into the 'how do we make it profitable?' phase.

Some capabilities surged (Perception, Creation, Code). Some stalled hard (Prediction). And some are drowning in hype while we're trying to make them work in production (Agentic).

I'll be back with this same breakdown next year to see what actually changed versus what was just noise.

Until then—keep building, keep testing, and stay skeptical of the hype.

See you next Friday,

Tobias
