- Profitable AI Blog

How to Run Local AI That Pays for Itself

Plus the one workflow that already paid off for me

My DGX Spark has now been running for seven straight days. So far it hasn’t overheated, caught fire, or made me call tech support (knock on wood).

But it has changed how I approach AI automation.

Plot twist: I’ve already ordered a second one. We’ll get to the “why” in a second. For now, the Spark is humming — working through AI workflows that simply weren’t interesting (or profitable) for me to run in the cloud.

Let me show you what I mean.

⚡Sovereign AI: Grab the Recording

Thursday’s Sovereign AI Workshop walked through my complete local AI setup - hardware, software, and the exact Insightizer workflow that processes call transcripts into structured insights.

Grab the recording + get the Insightizer workflow as part of the $10K AI No-Code Bundle.

Three proven AI workflows that have added $10K+ in recurring profit for businesses ranging from solo founders to $100M+ companies.

Part 1 – The Models Are Good Enough

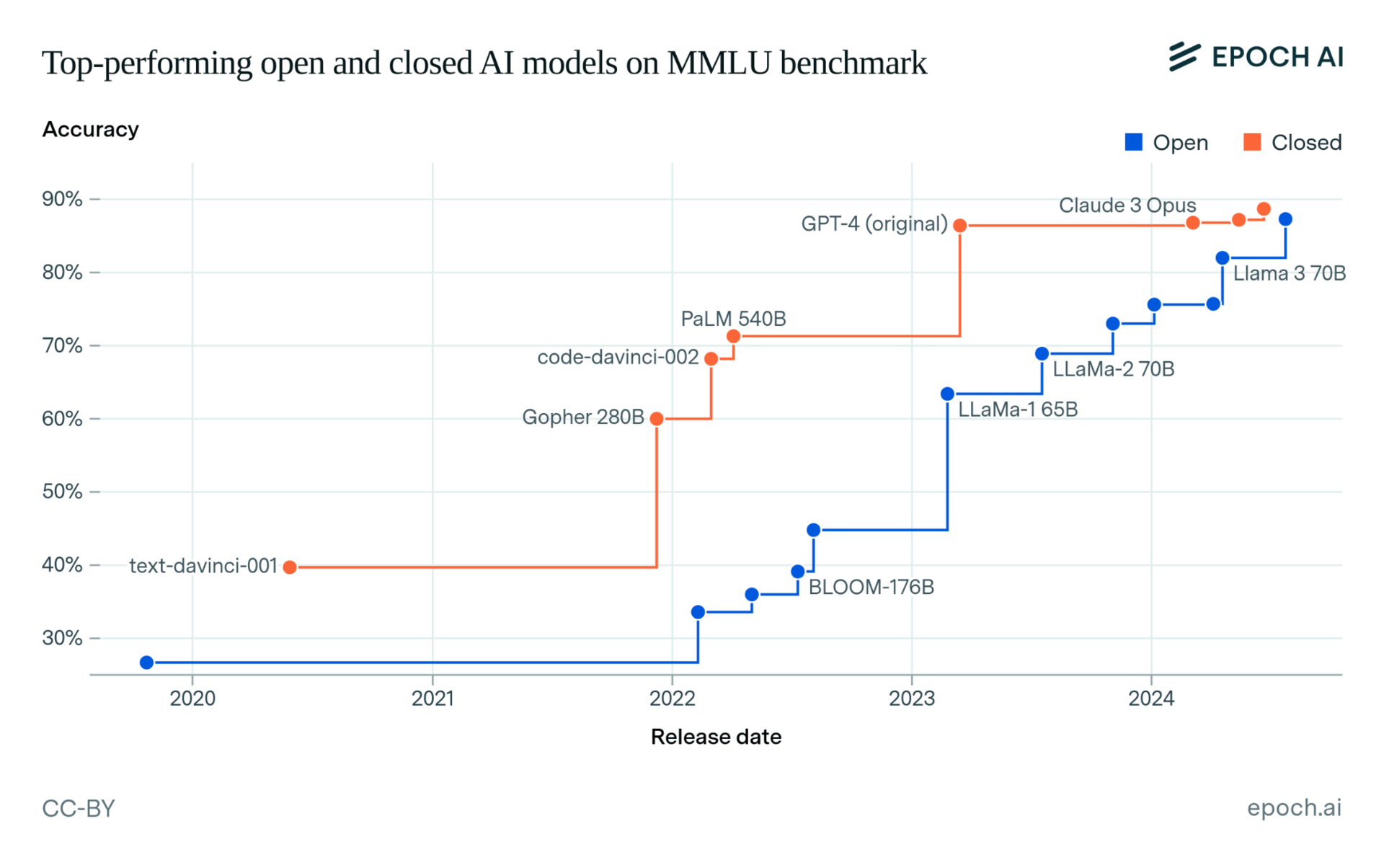

A few months ago, I wrote about the Big Open-Source AI Opportunity:

open-weights models went from “toy” → “top tier”.

Here’s the data in case you missed it:

Two key inflection points were Meta’s substantial investment in open-source AI and DeepSeek’s success in proving that frontier-class models can be trained by a small AI lab in China.

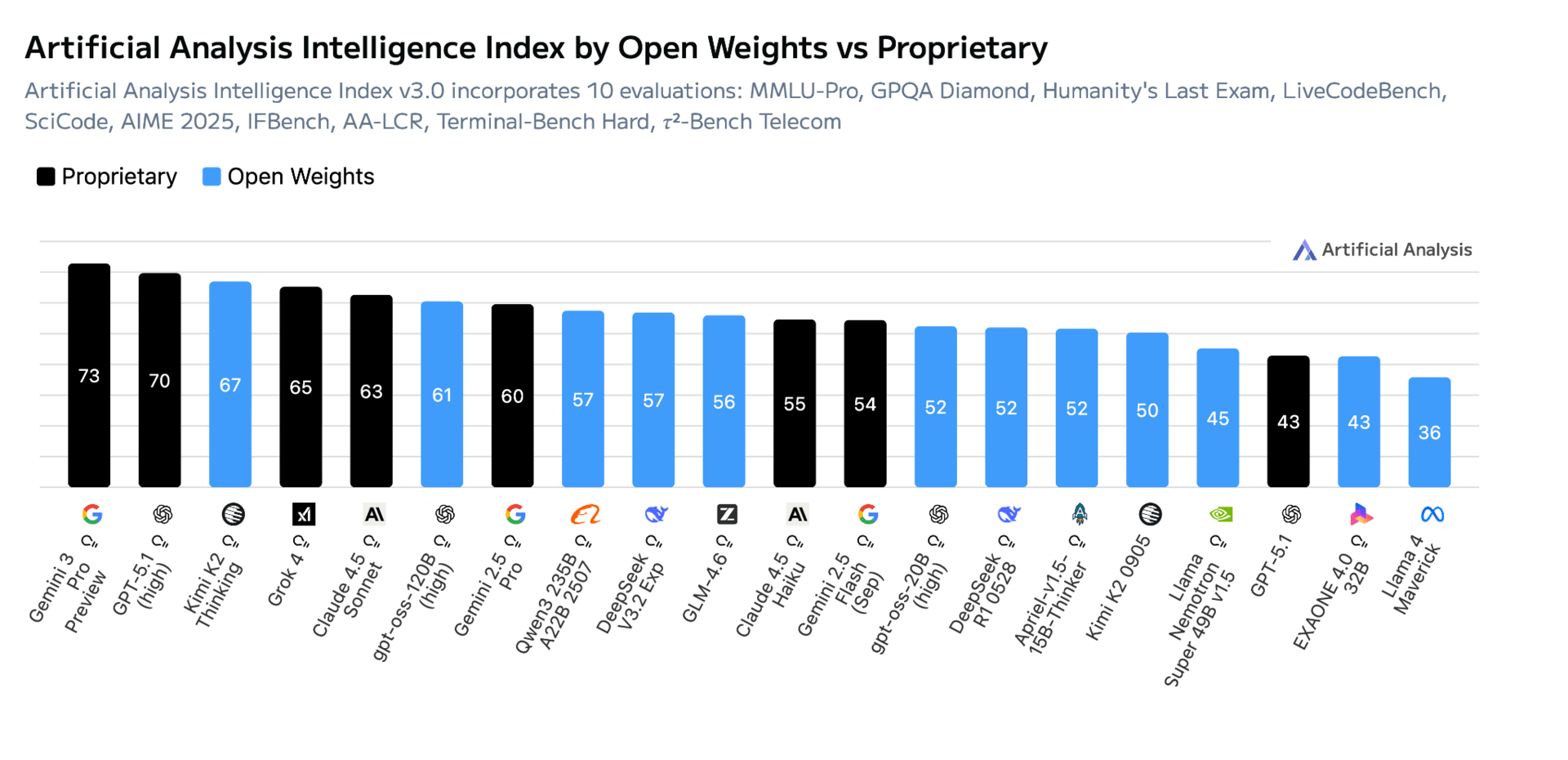

More recently, here’s the open vs. closed performance evaluation across 10+ different benchmarks as of November 2025:

The story is simple:

If you want the single best model, go to the cloud.

If you want a good-enough model, open-source has you covered.

So the model problem is solved.

That was Part 1.

Part 2 – The Hardware Got Affordable

Up until recently, running big open-source models required you to:

become a part-time Linux admin

sacrifice a room to fans louder than a jet engine

explain why the electricity bill looks like a mining operation

But something changed at the start of this year.

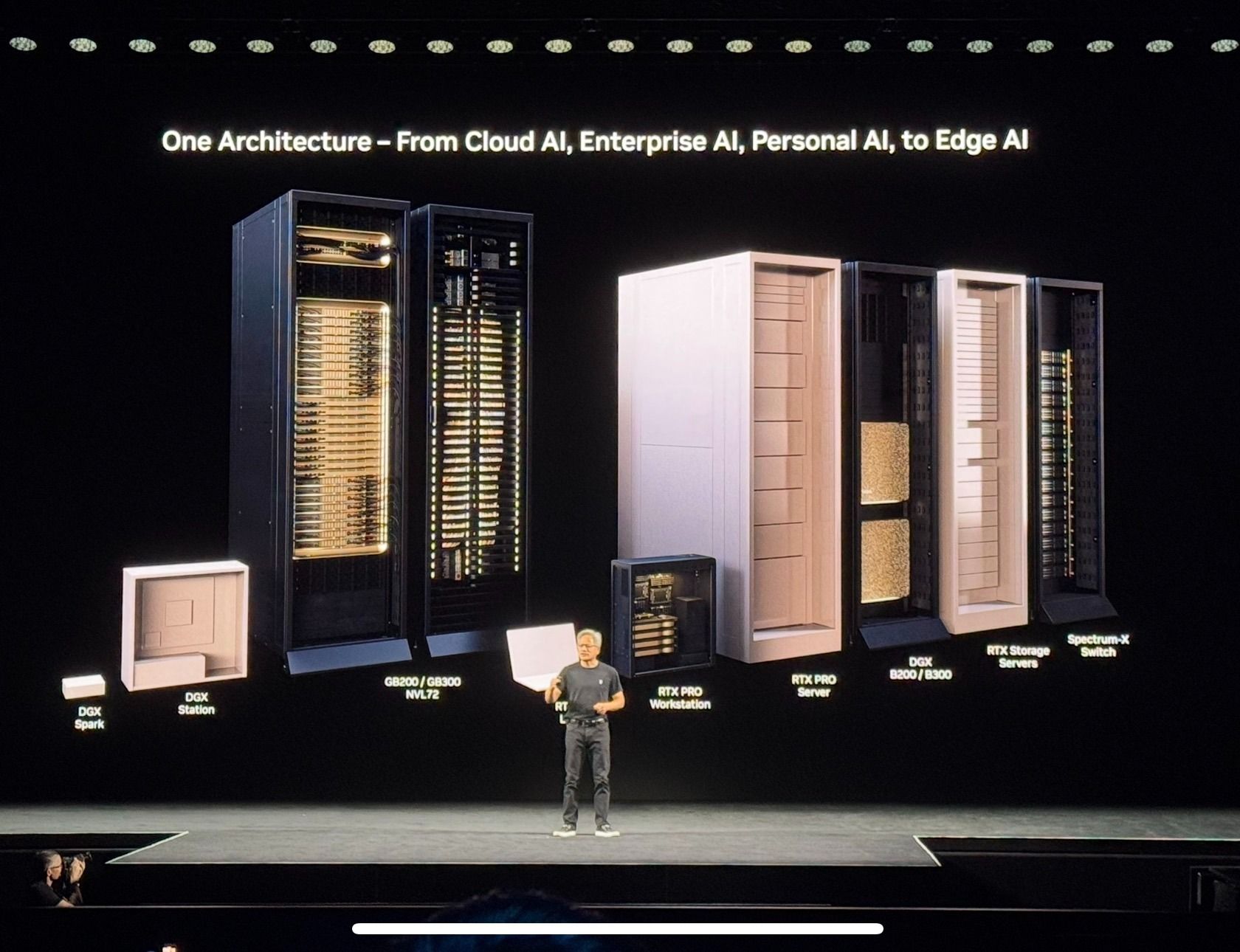

Here’s a photo I took at NVIDIA’s event in Paris:

Read it from right to left:

AI used to only live in datacenter-scale racks.

Then in enterprise towers.

Then in workstations.

And now — that tiny box on the left?

Yes, that’s the Spark I bought.

NVIDIA didn’t build that for fun. They built it because prosumer AI hardware is now an actual category.

And they’re not alone. Apple. AMD. Startup builders. Boutique system integrators.

Everyone is offering “AI mini supercomputers” you can run under your desk.

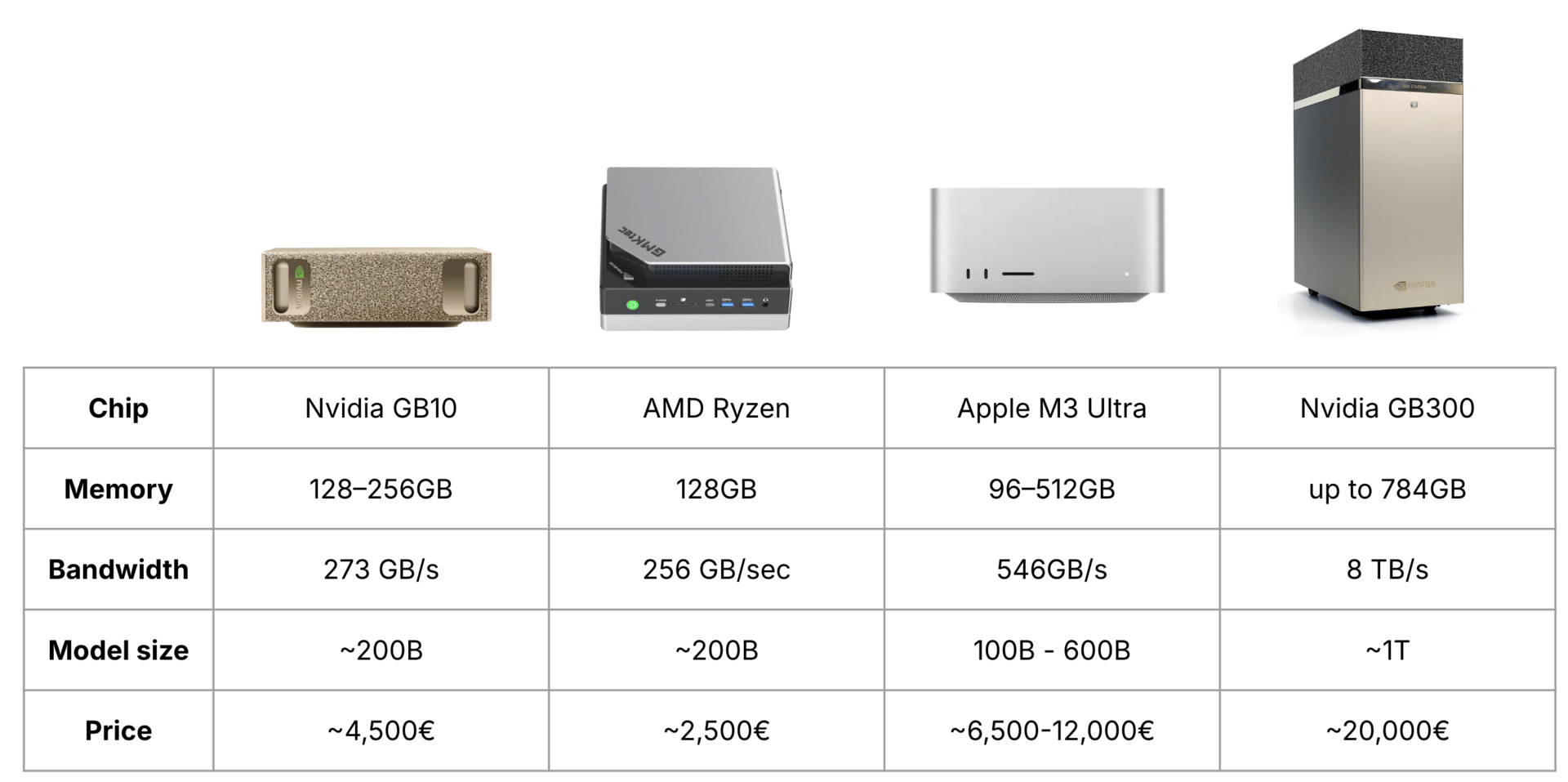

Here’s a simple comparison of 3 popular prosumer devices and how they compare to an entry-level, professional NVIDIA workstation.

Bottom line: We now have a middle class of AI hardware.

Standardized, reliable boxes that:

easily run LLMs in the 100–200B parameter range

have 128–512GB of unified or dedicated memory

cost €5,000 or less

This was the missing piece for years – hardware that went from ‘enterprise-sized’ to ‘desk-sized’. This is where local AI stops being a hobby and starts being a profit lever instead.

Part 3 – The Software Doesn’t Suck Anymore

To be honest: Two years ago, running local AI felt like assembling IKEA furniture with missing screws.

Today? The ecosystem isn’t perfect, but it has definitely matured.

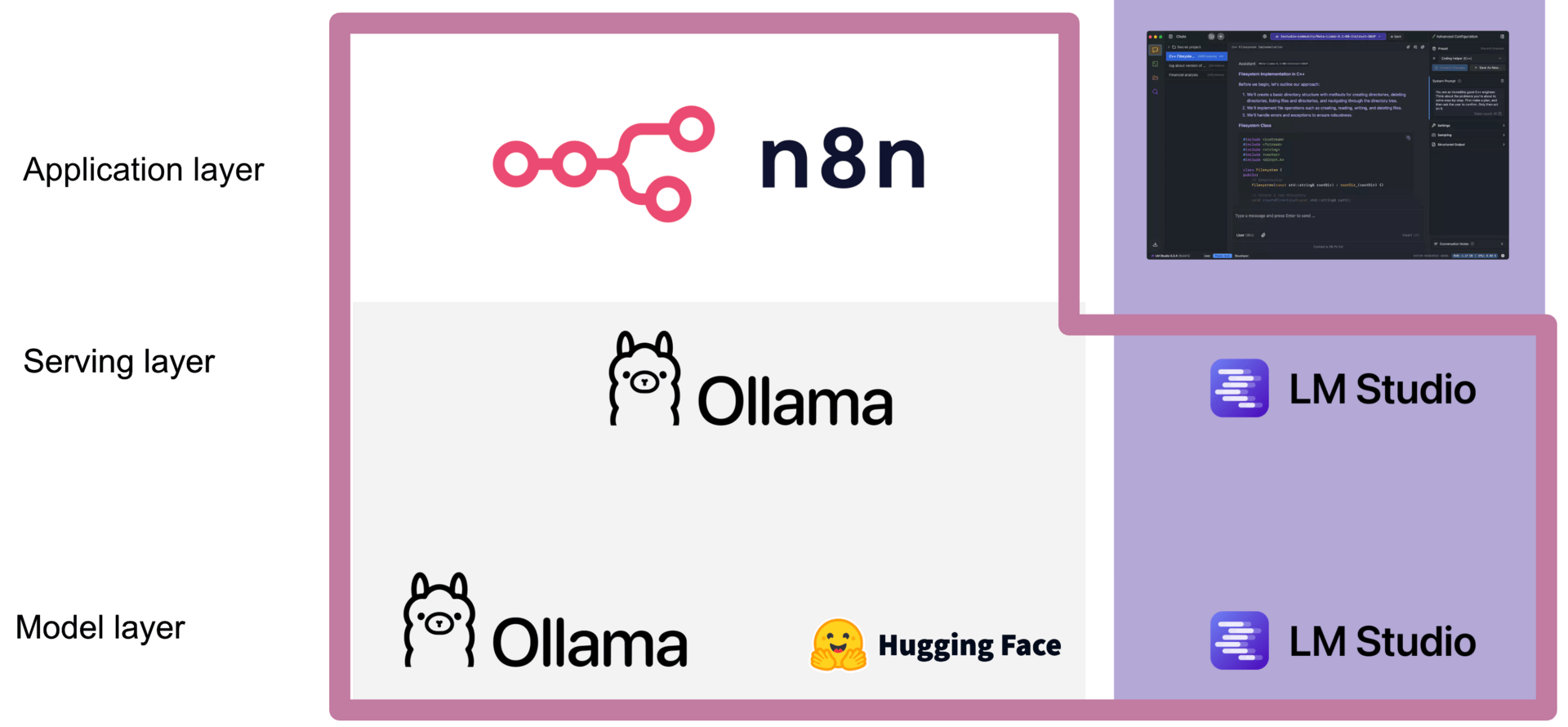

Here’s how a basic stack looks now:

Ollama → handles model management cleanly

LM Studio → one-click chat interface + serving layer

n8n → your automation platform

For the first time, running local AI feels like a real system, not a weekend science fair project. Concretely, it took me about 1 hour from unboxing my Spark to running my first local workflow (that I had already validated in the cloud).
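
To make "a real system" a bit more concrete, here is a minimal sketch of how a script (or an n8n HTTP node doing the equivalent) could talk to a local model. It assumes Ollama is running on its default port and uses its non-streaming generate endpoint. The helper names `build_payload` and `generate` and the model name in the usage comment are placeholders for illustration, not part of the setup described here.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(model: str, prompt: str) -> dict:
    """Build a non-streaming request body for the generate endpoint."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, url: str = OLLAMA_URL,
             timeout: float = 300.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    body = json.dumps(build_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # With "stream": False the server answers with a single JSON object
        # whose "response" field holds the full completion.
        return json.loads(response.read().decode("utf-8"))["response"]


# Usage (needs a running server and a model you have already pulled):
# print(generate("my-local-model", "Summarize this call in three bullets: ..."))
```

LM Studio can also serve models over a local HTTP endpoint, so the same pattern should carry over with a different URL and request shape.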

And this is where things get interesting.

What All This Actually Unlocks

Let me give you the cleanest possible example:

I built something I call the Insightizer.

The workflow is super simple:

I take my voice recording from an everyday call (clients, meetings, etc.)

I transcribe what I said locally

The transcript is sent to a private inbox

n8n picks it up

A local model extracts insights → drafts structured content

The workflow replies via email, so the insights pop up in my inbox a few minutes later

That’s it.
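
The "extract insights" step can be sketched in a few lines. This is not the actual Insightizer, which lives in n8n; it is a hedged illustration of the same idea in Python. The prompt wording, the `Insight` fields, and the function names are all assumptions made for this example.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Insight:
    title: str
    summary: str


# Hypothetical prompt; the real workflow's prompt is not shown in this post.
PROMPT_TEMPLATE = (
    "Extract the key insights from the call transcript below.\n"
    'Answer with a JSON array of objects with the keys "title" and "summary".\n\n'
    "Transcript:\n{transcript}"
)


def build_prompt(transcript: str) -> str:
    """Wrap the transcript in the extraction prompt."""
    return PROMPT_TEMPLATE.format(transcript=transcript.strip())


def parse_insights(model_output: str) -> List[Insight]:
    """Parse the model's reply, tolerating chatter around the JSON array."""
    start = model_output.find("[")
    end = model_output.rfind("]")
    if start == -1 or end <= start:
        return []
    try:
        items = json.loads(model_output[start:end + 1])
    except json.JSONDecodeError:
        return []
    insights = []
    for item in items:
        # Skip anything that does not match the requested shape.
        if isinstance(item, dict) and "title" in item and "summary" in item:
            insights.append(Insight(str(item["title"]), str(item["summary"])))
    return insights


def format_email(insights: List[Insight]) -> str:
    """Render the insights as a plain-text email body."""
    if not insights:
        return "No insights found in this memo."
    lines = [
        f"{number}. {insight.title}\n   {insight.summary}"
        for number, insight in enumerate(insights, start=1)
    ]
    return "\n\n".join(lines)
```

Local models do not always return clean JSON, which is why the parser looks for the outermost brackets and returns an empty list instead of failing the whole run.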

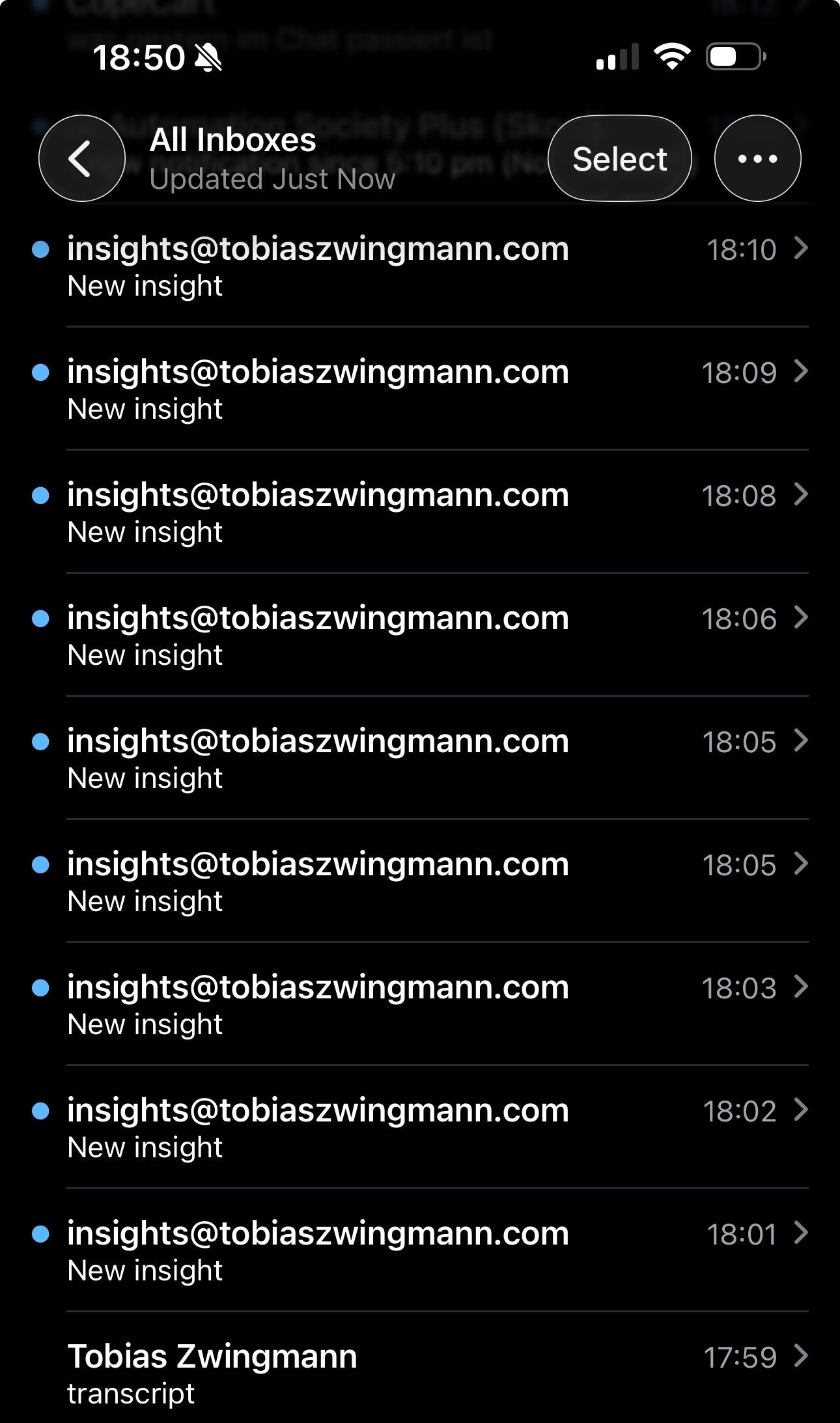

My Insightizer at work

One voice memo → structured insights → content pipeline.

Zero API calls. Zero per-request cost. Zero rate limits. Zero privacy issues.

In the last 5 days alone, my Insightizer produced 40 insights that will provide me with enough content seeds for the next few weeks.

Having this workflow alone is easily worth $5K for me.

So this is where the economics snap into place:

One workflow can pay for the entire hardware setup. The Insightizer easily did for me.

And I’ve already lined up four more workflows.

(Could I have done it with 3rd-party services? Yes, but aside from the ongoing costs, it would not have been possible to use it with memos containing client information.)
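
The payback logic is simple arithmetic, and a small sketch makes it easy to plug in your own numbers. The figures in the usage comments are illustrative assumptions (a 5,000 hardware budget in a single currency and invented per-request and monthly values), not measurements from this setup.

```python
import math


def breakeven_requests(hardware_cost: float,
                       cloud_cost_per_request: float,
                       local_cost_per_request: float = 0.0) -> int:
    """Number of requests after which local hardware is cheaper than the cloud."""
    saving = cloud_cost_per_request - local_cost_per_request
    if saving <= 0:
        raise ValueError("Local must be cheaper per request to ever break even.")
    return math.ceil(hardware_cost / saving)


def payback_months(hardware_cost: float,
                   monthly_value: float,
                   monthly_running_cost: float = 0.0) -> float:
    """Months until the value a workflow produces covers the hardware."""
    net = monthly_value - monthly_running_cost
    if net <= 0:
        raise ValueError("The workflow must produce more than it costs to run.")
    return hardware_cost / net


# Illustrative numbers only:
# breakeven_requests(5000, 0.25)   -> requests needed at 0.25 per cloud call
# payback_months(5000, 520, 20)    -> months at 500 net value per month
```

Local inference is not literally free, since electricity and your own time count, which is why both functions accept a running cost instead of assuming zero.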

Why I Even Ordered a Second Spark

The Spark’s 128GB memory is perfect for running one larger model.

But if you want:

one reasoning LLM AND

one multimodal model

…in memory at the same time?

It gets tight.

Ollama starts offloading, reloading, slowing down, and introducing errors. So I want to test a dual-Spark setup via NVIDIA’s ConnectX high-bandwidth networking.
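
A back-of-the-envelope estimate shows why two models get tight in 128GB. The sketch below uses the common rule of thumb that weights take roughly parameters × bits per weight ÷ 8 bytes. The overhead factor, the reserved system memory, and the example model sizes are assumptions for illustration; real usage depends on context length, runtime, and quantization format.

```python
from typing import List, Tuple


def weights_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits_in_memory(models: List[Tuple[float, int]],
                   total_gb: float,
                   overhead_factor: float = 1.2,
                   reserved_gb: float = 8.0) -> bool:
    """Check whether all models fit in memory at the same time.

    models: (parameters in billions, bits per weight) for each model.
    overhead_factor: assumed headroom for KV cache and activations.
    reserved_gb: assumed memory kept free for the OS and other processes.
    """
    needed = sum(weights_gb(p, b) for p, b in models) * overhead_factor
    return needed + reserved_gb <= total_gb


# Hypothetical example: one 120B model at 4-bit fits in 128 GB on its own,
# but adding a 90B multimodal model at 4-bit does not.
single = fits_in_memory([(120, 4)], total_gb=128)
pair = fits_in_memory([(120, 4), (90, 4)], total_gb=128)
```

When the check fails, the runtime has to swap models in and out, which matches the offloading and reloading behavior described above.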

Best case: I unlock a “mini cluster” that runs text + image workflows in parallel.

Worst case: I have a clean setup I can use for client demos.

In any case, I’ll soon own more AI hardware than houseplants.

The Bigger Picture

If you skimmed everything above, here’s the one idea I want you to leave with:

We no longer just have open-source models that can run locally. We now have the hardware and ecosystem that make running them profitable.

And if I can find a workflow that makes it all pay for me, you can easily find one for yourself, too.

It doesn’t have to be content. Your profit pocket might be in finance, confidential document processing, or just speeding up time to value.

Local AI isn’t a rebellion against the cloud. It’s just a different business model:

Cloud = perfect for interactive, human-in-the-loop work

Local = perfect for automated, high-volume workflows

Put them together, you get freedom, cost control, and predictable margins.

That’s the real upgrade.

See you next Friday,

Tobias

PS: If you want the full deep dive on this, you can grab the recording of my 90-minute Sovereign AI workshop, which includes the Insightizer workflow, as part of my $10K AI No-Code Bundle. The bundle is available until Sunday. You can check it out here.
