- Profitable AI Blog


Analyzing 4,000+ Survey Responses in 20 Minutes for $20 in AI Cost

(And how to save $10,000 in consulting fees along the way)

You probably know the meme.

CEO says: "We have great customer data!"

Then this is the customer data:

Except… this isn’t a meme.

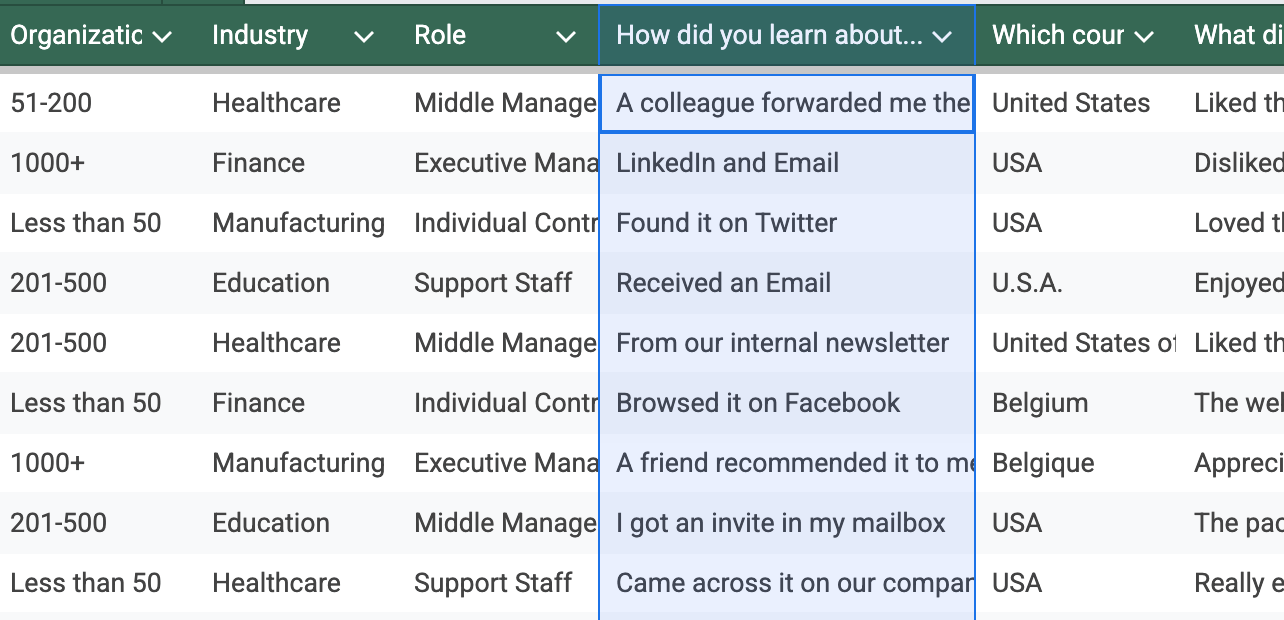

It’s a real case from my own work: an event organizer wanted to know how attendees had heard about their conference, and they had 4,000+ survey records to analyze, looking very much like the screenshot above.

Today, I’ll show you how I helped this company analyze the dataset with AI in less than a day, what we can learn from it, and how you can access the workflow, too.

Let’s dive in!

Why Most "Great" Data Never Gets Analyzed

First of all, the case above isn’t an outlier. In fact, it’s the norm. Most business data is ugly data like this. Hard to analyze, but potentially full of valuable insights:

Customer complaints in support tickets

Sales trends scattered across messy spreadsheets

Employee feedback sitting unread in survey responses…

All of it is imperfect information that traditional analysis tools choke on. But the fundamental problem with ugly data isn't that it's messy.

It's that cleaning it used to be more expensive than the insights it contained.

Take survey responses. People write things like "LinkedIn and referrals" or "saw it on social", maybe "Twitter". Or: "my friend mentioned it during coffee." There's no "right format" for authentic human feedback. You can't build a database schema for how people actually communicate.

Illustrative example

Traditional analysis would force these responses into predefined categories, killing the very insights that make them valuable. Or you'd spend weeks manually categorizing thousands of entries, hoping to find patterns worth the investment.

This uncertainty killed ugly data projects before AI. The work didn't fit into standard BI workflows, and nobody wanted to gamble weeks of analyst time on maybe finding something useful.

But AI changed this equation completely.

The $10K Analysis That Took 20 Minutes

Here's what happened with that 4,000+ record event survey.

The traditional approach would have meant hiring analysts to manually read through every response, create categories, validate the taxonomy, and build reports.

Timeline: 3-4 weeks.

Cost: $5-10K.

Risk: High chance the insights wouldn't justify the investment.

Instead, I fed all the messy responses into an AI-powered categorization system.

Timeline: ~20 minutes of AI work.

Cost: <$20 in API calls.

Risk: Almost zero.

Analyzing 2M tokens (~1.5M words) in 10 minutes

Cost for calling the AI service

The results were immediately useful:

1) We identified the top acquisition channels – and discovered that one channel was driving significantly more registrations than the marketing team expected, while another "priority" channel was underperforming.

2) We found clear audience segments within each channel. Certain types of visitors consistently came through specific channels, revealing targeting opportunities the team hadn't considered.

3) We spotted geographic and industry patterns that immediately impacted their marketing strategy.

Sample analysis: middle management prefers emails, CEOs prefer recommendations

And because the analysis cost $20 (plus some of my time) instead of $10K, they could afford to explore follow-up questions. When traditional analysis costs a fortune, you get one shot to ask the right questions. When it costs pocket change, you can iterate until you find what matters.

So the organizer didn't just save money – they discovered insights they never would have looked for under the old model. They found profitable patterns hiding in data they almost didn't bother analyzing.

And the same can happen with any “ugly data”.

This isn't just one success story. It's a preview of how the entire data analysis industry is changing. Here's what's really happening:

The Shift

In the "old days" (before ChatGPT), benefiting from AI meant having clean data first. Now, the companies that win are the ones that have figured out how to extract value from messy data fastest.

Your competitors using AI aren't smarter than you. They're working with a different cost structure. While you're debating whether ugly data analysis is worth the investment, they're already acting on profitable, “good enough” insights from their messy spreadsheets.

AI doesn't make your data cleaner – it makes cleaning unnecessary for many insights. Instead of forcing responses into rigid categories, AI understands context, handles ambiguity, and makes judgment calls at scale. It reads "LinkedIn and referrals" and maps it to both channels. It knows "colleague mentioned it" means word-of-mouth.

Most importantly, it does this in minutes, not weeks.

This changes the entire economics of data analysis. When processing thousands of responses costs $20 plus a few hours instead of $10K plus a few weeks, you can afford to analyze data you previously ignored.
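The arithmetic behind that shift fits in a few lines. Below is a minimal sketch that estimates API cost from a word count. It assumes the common rule of thumb of roughly 0.75 English words per token and a hypothetical blended price of $10 per million tokens. That price is not the model or rate used in this project. It was chosen only because it reproduces the ~$20 figure. Real prices vary by model and usually differ for input and output tokens.

```python
def estimate_tokens(words: int, words_per_token: float = 0.75) -> float:
    """Rough token count from a word count (rule of thumb: ~0.75 English words per token)."""
    return words / words_per_token


def estimate_cost(tokens: float, usd_per_million_tokens: float) -> float:
    """API cost in USD for a token volume at a flat per-million-token price."""
    return tokens / 1_000_000 * usd_per_million_tokens


# ~1.5M words, as in the run described in this post
tokens = estimate_tokens(1_500_000)
# Hypothetical blended price, NOT the actual rate from this project
cost = estimate_cost(tokens, usd_per_million_tokens=10.0)
print(f"{tokens:,.0f} tokens -> ${cost:.2f}")  # prints "2,000,000 tokens -> $20.00"
```

Swap in your model's real per-million prices to get a budget before you run anything. Split input and output tokens if your provider prices them differently.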

How The Category Mapper Works

The process I used isn't magic – it's a systematic approach that you can replicate with any messy text data. I call it the Category Mapper, and it works in three phases that turn chaos into structure automatically.

Phase 1: Category Finder. The AI reads through all your messy responses, identifies patterns, and suggests the most relevant categories. No manual taxonomy creation needed.
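To make Phase 1 concrete, here is a minimal sketch. It is not the actual workflow, which runs in n8n with its own prompts. It assumes a hypothetical `complete(prompt) -> str` function wrapping whichever LLM API you use, and it assumes the model is told to reply with a JSON array. It sends a random sample of answers, asks for a short category list, and de-duplicates the names the model returns.

```python
import json
import random
from typing import Callable

# Hypothetical: any function that sends a prompt to an LLM and returns its text reply.
Complete = Callable[[str], str]


def find_categories(
    responses: list[str],
    complete: Complete,
    sample_size: int = 300,
    max_categories: int = 12,
    seed: int = 0,
) -> list[str]:
    """Ask an LLM to propose a category list from a sample of free-text answers."""
    # A sample is usually enough to surface the main themes and keeps the prompt small.
    rng = random.Random(seed)
    sample = rng.sample(responses, min(sample_size, len(responses)))
    prompt = (
        "Here are survey answers to 'How did you hear about us?'.\n"
        f"Propose at most {max_categories} short, non-overlapping categories.\n"
        "Reply with a JSON array of strings only.\n\n"
        + "\n".join(f"- {r}" for r in sample)
    )
    raw = json.loads(complete(prompt))
    # Strip whitespace and drop case-insensitive duplicates, keeping the first spelling seen.
    seen: dict[str, str] = {}
    for name in raw:
        cleaned = str(name).strip()
        if cleaned and cleaned.lower() not in seen:
            seen[cleaned.lower()] = cleaned
    return list(seen.values())[:max_categories]
```

Review the proposed list before moving on. A quick human check here is cheap, and every later step depends on it.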

Phase 2: Category Mapper. Each original response gets mapped to one or more categories. The AI handles ambiguous entries intelligently and routes unmatched responses to an "Other" bucket.
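Phase 2 can be sketched as one call per response. This sketch assumes the same hypothetical `complete` function and a JSON-array reply. Any name the model returns that isn't on the approved list is dropped. An empty or unparsable reply falls into "Other". That is how "LinkedIn and referrals" can land in two categories at once. This is an illustration of the idea, not the prompt used in the downloadable workflow.

```python
import json
from typing import Callable

Complete = Callable[[str], str]  # hypothetical LLM call: prompt in, text out


def map_response(text: str, categories: list[str], complete: Complete) -> list[str]:
    """Map one free-text answer to zero or more known categories, else ['Other']."""
    prompt = (
        "Assign the survey answer below to every matching category from this list: "
        + json.dumps(categories)
        + "\nReply with a JSON array of category names (it may be empty).\n\n"
        + f"Answer: {text}"
    )
    try:
        proposed = json.loads(complete(prompt))
    except json.JSONDecodeError:
        proposed = []  # unparsable reply: treat as unmatched instead of failing the batch
    if not isinstance(proposed, list):
        proposed = []
    # Keep only approved names (in list order) so the model cannot invent categories.
    matched = [c for c in categories if c in proposed]
    return matched or ["Other"]
```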

Phase 3: Data Output. The categories are turned into an analysis-ready format, with new columns added to your dataset. Your original data stays untouched while gaining structured insights.
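One way to picture Phase 3 is one 0/1 column per category, added to a copy of each row. The `cat_` prefix and the row-as-dict layout below are assumptions made for illustration. The original rows are never modified.

```python
def add_category_columns(
    rows: list[dict[str, str]],
    mappings: list[list[str]],
    categories: list[str],
    prefix: str = "cat_",
) -> list[dict[str, object]]:
    """Return new rows with one 0/1 column per category; input rows are not modified."""
    if len(rows) != len(mappings):
        raise ValueError("rows and mappings must have the same length")
    all_columns = categories + ["Other"]
    out = []
    for row, labels in zip(rows, mappings):
        new_row: dict[str, object] = dict(row)  # copy, so the original row stays intact
        for cat in all_columns:
            new_row[prefix + cat] = int(cat in labels)
        out.append(new_row)
    return out
```

The resulting rows can be written back to a spreadsheet-friendly file with Python's `csv.DictWriter`.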

Sample mapping

The beauty is that this works for any unstructured text: survey responses, customer complaints, sales notes, support tickets.

Feed in mess, get back structure.

The Complete Category Mapper Workflow

Ready to process your ugly data in minutes instead of weeks? Here's the exact system I used, packaged as a plug-and-play workflow you can download and run today, including an in-depth tutorial by me.

What you'll get:

15-minute video walkthrough showing the complete setup

Ready-to-import n8n workflow file

The exact prompts and configurations I used

Step-by-step setup guide for your own data

Only available until Sep 15!

What's Next?

Once you have your categorized data, the real analysis begins. Use pivot tables and visualizations to explore patterns, or feed the structured data directly into ChatGPT for even faster insights.
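Here is a hypothetical example of that first pivot. The sketch below counts category tags per audience segment from rows with 0/1 category columns (such as `cat_Email`). This kind of table is behind findings like "CEOs prefer recommendations". The `role` column and the category names are made up for illustration. A spreadsheet pivot table gives you the same result without code.

```python
from collections import Counter


def crosstab(
    rows: list[dict[str, object]],
    segment_col: str,
    categories: list[str],
    prefix: str = "cat_",
) -> dict[str, Counter]:
    """Count, per segment value, how many rows were tagged with each category."""
    table: dict[str, Counter] = {}
    for row in rows:
        segment = str(row.get(segment_col, "Unknown"))
        counts = table.setdefault(segment, Counter())
        for cat in categories:
            if row.get(prefix + cat):
                counts[cat] += 1
    return table
```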

The same workflow works for any ugly text data: customer feedback, sales notes, support tickets, employee surveys. The only things that change are the column names and the types of insight you're looking for.

Questions? Hit reply – I read every email.

See you next Friday!

Tobias

PS: Don’t forget – you can access the complete workflow, including a crisp 15-minute video tutorial, until Monday.
