5 Reasons Enterprise AI Fails

And how to fix them

PwC just released a new survey of 4,454 CEOs across 95 countries.

56% saw zero financial benefit from AI. Only 12% saw improvements in both revenue AND costs. Yet most CEOs’ biggest worry is whether they’re transforming fast enough.

The report explains what the 12% have in common: AI-ready tech stacks, clear roadmaps, governance frameworks, and people and cultural readiness. All true and important. But there's something the report didn't cover (the thing I think matters most):

A way to decide which AI investments are worth making before you build anything.

Over the last five years of applied AI consulting, working with everyone from small teams to big enterprises, I've seen the same mistakes over and over.

Here are the 5 reasons most enterprise AI investments fail, and how to fix them.

Let’s dive in!

1. Measuring deployments, not impact

Many companies I’ve met that “made the leap into production” track their deployments with metrics like models built, tools launched, or pilots completed.

This is great for technical pipeline management. However, to build profitable AI solutions, you also need to track business impact, such as:

Cycle time reductions

Margin lift per process

Hours saved per week

Conversion rates

etc.

I've been working with a large media company. When I asked how many AI solutions they had in production, they didn't have an exact count. The thing is, it didn't matter.

What everyone did know was their highest-impact deployment: a personalization model that had helped transform the company from traditional to digital sales. That single AI investment made a huge difference.

My recommendation: before you start any AI project, define the business metric it's supposed to move, even if only at a high level. Not "implement AI in customer service," but "reduce average response time from 4 hours to 1 hour" or "automatically pre-fill 60% of all data field entries to cut manual work time."

Even if you can’t always measure the impact directly, being clear about what business metric you want to move is key.

If you can't state the outcome in one sentence, you're not ready to build.

2. Discovery without thresholds

A friend of mine works at a large bank. He told me they had 50+ AI use cases sitting on the backlog.

"Which ones are the most valuable?" I asked.

He didn't know. Nobody did.

Turns out the list was just a collection of ideas. Every single one now needs to be re-assessed to figure out if it's actually worth pursuing. All that time spent gathering ideas – and now they're basically starting over.

That time could have been spent building the three or four that actually matter.

This is the norm. Companies collect AI ideas without ever asking: what's the minimum return that would make this worth doing?

To avoid this situation, set a threshold before you start. A simple rule works: no AI idea ends up on the backlog unless the problem you're tackling is worth at least $10K or 1,000 hours per year (or whatever your threshold is).

You'd be amazed how many "exciting AI opportunities" die when you apply that filter.
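To make the filter concrete, here is a minimal Python sketch. It assumes each idea is recorded with an estimated annual dollar value and the annual hours spent on the problem; the `AIIdea` record, its field names, and the example ideas are all hypothetical, and the $10K / 1,000-hour limits are simply the example thresholds from the rule above.

```python
from dataclasses import dataclass

# Hypothetical record for a backlog idea; field names are illustrative only.
@dataclass
class AIIdea:
    name: str
    annual_value_usd: float  # estimated value of the problem per year
    annual_hours: float      # estimated hours spent on the problem per year

# Example thresholds from the rule above; replace with your own.
MIN_VALUE_USD = 10_000
MIN_HOURS = 1_000

def passes_threshold(idea: AIIdea) -> bool:
    """An idea qualifies if it clears EITHER the value or the hours threshold."""
    return idea.annual_value_usd >= MIN_VALUE_USD or idea.annual_hours >= MIN_HOURS

def filter_backlog(ideas: list[AIIdea]) -> list[AIIdea]:
    """Keep only qualifying ideas, sorted by value (then hours), largest first."""
    kept = [i for i in ideas if passes_threshold(i)]
    return sorted(kept, key=lambda i: (i.annual_value_usd, i.annual_hours), reverse=True)

if __name__ == "__main__":
    # Illustrative numbers only.
    backlog = [
        AIIdea("Invoice data pre-fill", 45_000, 1_800),
        AIIdea("Meeting summary bot", 3_000, 200),
        AIIdea("Contract clause search", 8_000, 1_200),
    ]
    for idea in filter_backlog(backlog):
        print(idea.name)
```

With these made-up numbers, the meeting summary bot never reaches the backlog, while the contract search idea survives on hours alone. Sorting the survivors by value is just one possible design choice, so the team looks at the biggest problems first.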

3. There’s no kill switch

A large insurance company I worked with had built an internal ChatGPT clone. Early on, everyone was excited. Leadership loved it. Teams were using it. It’s so innovative!

But at some point it became clear that the tool required heavy ongoing maintenance, which was hard to justify with the “real” ChatGPT getting better and better and Microsoft pushing Copilot licenses aggressively.

The decision on what to do with the internal tool kept getting postponed. It became a zombie project. Everyone knew it wasn't worth the effort anymore, but nobody wanted to pull the plug. There weren’t any criteria for when to stop. No permission to fail.

This is what people criticize as "innovation theatre" and "pilot graveyards." Yet most companies have no mechanism for cleanly killing projects that somehow ended up in production.

The way I tackle this is to design clear Impact Thresholds and Cost Caps from day 1. If the value can’t be achieved or the cost cap gets exceeded, it’s time to re-assess the project.

It’s similar to an employee who’s on the payroll but never shows up to work.

No hard feelings when they leave.
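A kill switch only works if the criteria are written down before the project starts. The Python sketch below assumes you record an impact threshold and a cost cap on day 1 and compare them against measured numbers at each scheduled review; `ProjectStatus`, `reassessment_reasons`, and all figures are hypothetical. It flags a project for re-assessment rather than shutting it down automatically, matching the rule above.

```python
from dataclasses import dataclass

# Hypothetical snapshot of a project at a scheduled review.
@dataclass
class ProjectStatus:
    name: str
    impact_threshold: float  # minimum annual value agreed on day 1 (USD)
    measured_value: float    # annualized value observed so far (USD)
    cost_cap: float          # maximum spend agreed on day 1 (USD)
    cost_to_date: float      # actual spend so far (USD)

def reassessment_reasons(p: ProjectStatus) -> list[str]:
    """Return the day-1 criteria the project currently violates (empty list = keep going)."""
    reasons = []
    if p.measured_value < p.impact_threshold:
        reasons.append("impact below threshold")
    if p.cost_to_date > p.cost_cap:
        reasons.append("cost cap exceeded")
    return reasons

if __name__ == "__main__":
    # Illustrative numbers only.
    project = ProjectStatus("Internal chat assistant", impact_threshold=200_000,
                            measured_value=60_000, cost_cap=150_000, cost_to_date=180_000)
    reasons = reassessment_reasons(project)
    print("re-assess:" if reasons else "continue", reasons)
```

Because Discovery projects aren't expected to earn yet, a check like this makes the most sense once a project is in Delivery; applied too early, it would kill promising ideas before they had a chance to pay off.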

4. Mixing up learning and earning

There’s a reason we differentiate between Discovery and Delivery in AI solution development. Mix these two up and you end up with two failure modes:

Killing promising projects too early because they haven't "paid off" yet

Keeping zombie projects alive because of sunk-cost bias

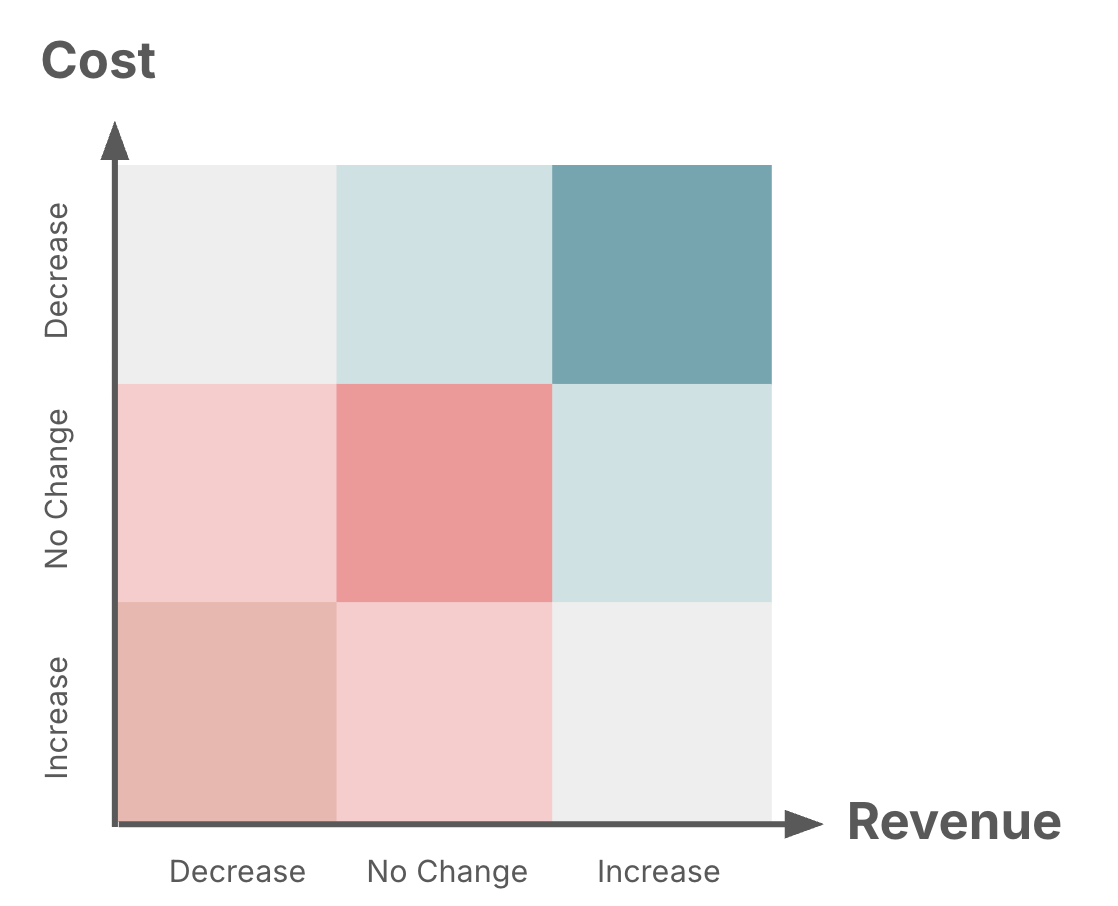

ROI only materializes once you cross the blue line into Production.

Building the shipping muscle (taking the one surviving idea and deploying it as a real asset) is the skill that counts most right now.

But you can’t really get there without learning and failing.

I wrote a checklist for deciding when an AI solution is ready for prime time (it's less 'things to have' and more 'red flags to avoid').

A big win is to be explicit about which phase you're in. Discovery is learning: Does this work? Is the data good enough? Can our team actually use it? Delivery is earning: Now that we know it works, how do we scale it profitably?

Different phases, different metrics, different expectations.

5. Falling in love with the tech, not the problem

Someone tries ChatGPT for the first time (yes, there are still people!).

Someone sees a competitor announce an "AI initiative".

Someone attends a conference and comes back excited.

Suddenly there's pressure to 'do something with AI.'

But that’s more FOMO than real strategy work.

I once had a large NGO come to me asking for a "second brain": a company-wide knowledge system where anyone could ask questions and get answers from internal documents such as project reports.

It sounded great in theory. (Everyone would be so much more productive!)

In practice, however, it was technology looking for a problem.

When you dig into these general-purpose "second brain" cases, you’ll quickly realize they typically create more problems than they solve.

Who sees what content?

How do you manage access rights?

How do you keep it updated?

So many questions!

You end up restricting access so much that you might as well have separate solutions anyway.

What actually works is niche, domain-specific knowledge systems. A deep Q&A knowledge base for a product. Another one for customer support. One more for HR. If you want to get fancy, let them talk to each other. But each one has clear ownership, clear scope, and clear value.

The "second brain" vision was exciting. The domain-specific approach was boring. Guess which one delivers results?

I don’t start my AI Opportunity Scan sessions with "What is the latest and greatest AI tech out there?" I start with "Where are we losing money, time, or quality right now, and in which of those cases could AI be a good solution?"

The overlap is really where the money is.

Bottom Line

PwC showed (once more) that many AI investments don't pay off. But knowing the stats doesn't help you make better decisions.

To actually move into winners’ territory, you need a process for validating opportunities before you commit:

Is this opportunity real or am I chasing hype?

What's the minimum ROI that makes this worth doing?

What has to be true for this to work?

How will I know if it's failing – and when do I stop?

I’ve covered most of these steps in my book "The Profitable AI Advantage." It gives you the frameworks and techniques to lay the foundation for winning with AI (including the parts that come even before you worry about data infrastructure or change management).

If that sounds useful, you can find the book here or in your favorite bookstore using ISBN 1836205899.

See you next Saturday!

Tobias
